Apache Airflow AI Assistant

AI for Airflow & Workflow Orchestration

Transform your workflow orchestration with AI-powered Airflow assistance. Generate DAGs and data pipelines faster, with intelligent suggestions for workflow automation.

Trusted by data engineers and DevOps teams • Free to start

Why Use AI for Airflow Development?

Workflow orchestration requires complex DAGs. Our AI accelerates your pipeline development.

DAG Creation

Build Directed Acyclic Graphs with tasks, dependencies, and scheduling

Operators

Use Python, Bash, SQL, and custom operators for diverse tasks

Scheduling

Schedule workflows with cron expressions and dynamic execution dates

Task Dependencies

Define complex task dependencies with upstream/downstream relationships

Integrations

Connect to AWS, GCP, Azure, databases, and third-party services

Monitoring

Track workflow execution, logs, and metrics through the Airflow UI

Frequently Asked Questions

What is Apache Airflow and how is it used for workflow orchestration?

Apache Airflow is an open-source platform for programmatically authoring, scheduling, and monitoring workflows as Directed Acyclic Graphs (DAGs). Airflow provides Python-based DAG definitions, a rich UI for monitoring and troubleshooting, extensible operators and hooks, task dependencies and scheduling, retry mechanisms and alerting, and a scalable architecture. It is used for ETL/ELT data pipelines, ML model training workflows, batch processing jobs, data warehouse updates, report generation, and multi-cloud orchestration. It is known for its code-first approach, its flexibility, and an active community with extensive integrations.
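To make the "Directed Acyclic Graph" idea concrete, here is a minimal conceptual sketch that uses only the Python standard library (`graphlib`, Python 3.9+). It is not Airflow's scheduler and does not use any Airflow API; the task names are hypothetical. It only shows how upstream/downstream dependencies determine a valid run order.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on (its upstream tasks).
# All task names here are hypothetical examples.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(deps):
    """Return one valid run order for the tasks.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    which is why a workflow graph has to be acyclic.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    # "extract" always comes first and "load" always comes last;
    # "transform" and "validate" may appear in either order.
    print(execution_order(dependencies))
```

Because `transform` and `validate` do not depend on each other, an orchestrator is free to run them in parallel once `extract` has finished.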

How does the AI help with Airflow DAG creation?

The AI generates Airflow code including: DAG instantiation with default_args, task definitions with operators, task dependencies using >> and <<, dynamic DAG generation, XCom for task communication, sensors for external events, and branching logic. It creates production-ready DAGs following best practices.

Can it help with Airflow operators and integrations?

Yes! The AI generates code for: PythonOperator for custom logic, BashOperator for shell commands, SQL operators such as SQLExecuteQueryOperator for database queries, cloud operators (S3, GCS, BigQuery), HTTP and email operators, and custom operator creation. It creates workflows that integrate multiple systems.

Does it support Airflow deployment and scaling?

Absolutely! The AI understands the Airflow ecosystem, including: executor configuration (Local, Celery, Kubernetes), connection and variable management, Docker deployment, Kubernetes integration, monitoring and alerting setup, and performance optimization. It generates scalable Airflow workflows.

Start Building Workflows with AI

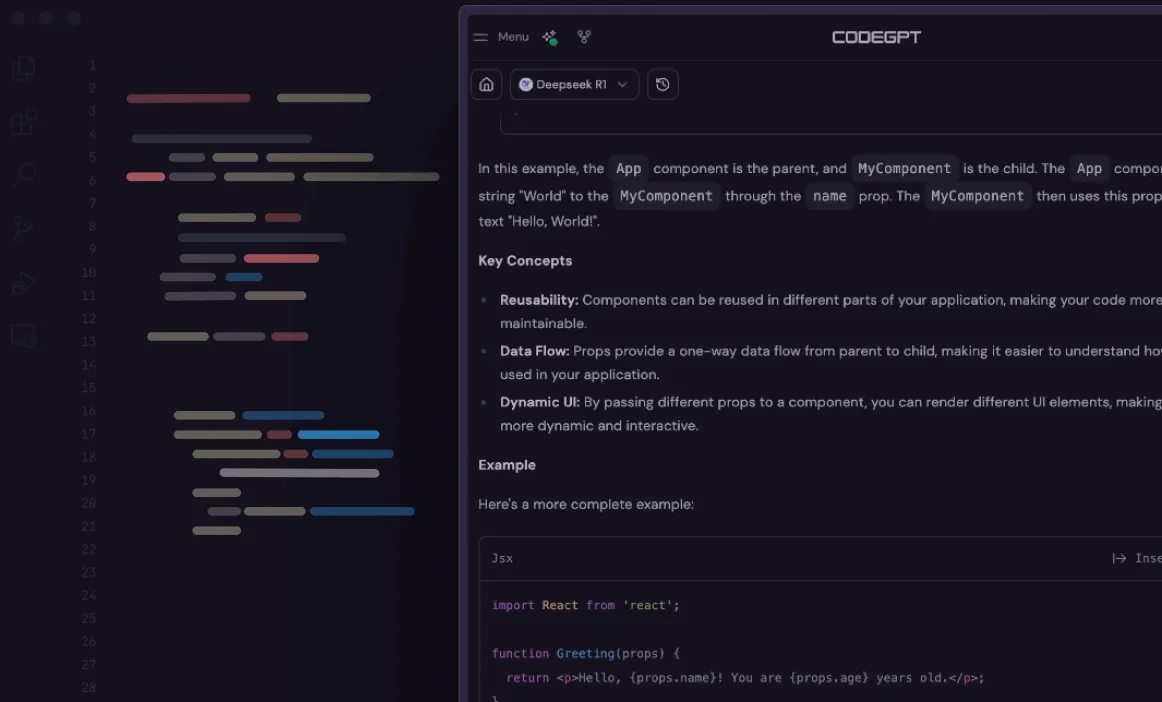

Download CodeGPT and accelerate your Airflow development with intelligent DAG code generation

Download VS Code Extension

Free to start • No credit card required

Workflow Orchestration Services?

Let's discuss custom Airflow pipelines, data orchestration, and automation solutions

Talk to Our Team

Airflow DAGs • Data orchestration